Original Link: https://www.anandtech.com/show/9185/intel-xeon-d-review-performance-per-watt-server-soc-champion

The Intel Xeon D Review: Performance Per Watt Server SoC Champion?

by Johan De Gelas on June 23, 2015 8:35 AM EST. Posted in: CPUs, Intel, Xeon-D, Broadwell-DE

The days when Intel neglected the low end of the server market are over. The most affordable Xeon used to be the Xeon E3: a desktop CPU with a few server features enabled, and with a lot of potential limitations unless you could afford the E5 Xeons. The gap between the Xeon E3 and E5, both in performance and price, is huge. For example, a Xeon E5 can address up to 768 GB and the Xeon E3 up to 32 GB. A Xeon E5 server could contain up to 36 cores, whereas the Xeon E3 was limited to a paltry four. And the list is long: most RAS and virtualization features were missing from the E3, and its L3-cache was much smaller. On those terms, the Xeon E3 simply did not feel very "pro".

Luckily, the customers in the ever expanding hyperscale market (Facebook, Amazon, Google, Rackspace and so on) need Xeons at a very large scale and have been demanding a better chip than the Xeon E3. Just a few months ago, the wait was over: Xeon D fills the gap between the Xeon E3 and the Xeon E5. Combining the most advanced 14 nm Broadwell cores, a dual 10 gigabit interface, a PCIe 3.0 root with 24 lanes, USB and SATA controllers in one integrated SoC, the Xeon D has excellent specs on paper for everyone who does not need the core count of the Xeon E5 servers, but who simply needs 'more' than the Xeon E3.

Many news editors could not resist calling the Xeon D a response to the ARM server threat. After all, ARM has repeated more than once that its ambition is to be competitive in the scale-out server market. The term "micro server" is hard to find on the PowerPoint slides these days; the "scale-out" market is a lot cooler, larger and more profitable. But the comments of the Facebook engineers quickly bring us back to reality:

"Introducing "Yosemite": the first open source modular chassis for high-powered microservers"

"We started experimenting with SoCs about two years ago. At that time, the SoC products on the market were mostly lightweight, focusing on small cores and low power. Most of them were less than 30W. Our first approach was to pack up to 36 SoCs into a 2U enclosure, which could become up to 540 SoCs per rack. But that solution didn't work well because the single-thread performance was too low, resulting in higher latency for our web platform. Based on that experiment, we set our sights on higher-power processors while maintaining the modular SoC approach."

It is pretty simple: the whole "low power simple core" philosophy did not work very well in the real scale out (or "high powered micro server") market. And the reality is that the current SoCs with an ARM ISA do not deliver the necessary per core performance: they are still micro server SoCs, at best competing with the Atom C2750. So currently, there is no ARM SoC competition in the scale out market until something better hits the market for these big players.

Two questions remain: how much better is the 2 GHz Xeon D compared to the >3 GHz Xeon E3? And is it an interesting alternative for those who do not need the high end Xeon E5?

Broadwell in a Server SoC

In a nutshell, the Xeon D-1540 is two silicon dies in one highly integrated package. Eight 14 nm Broadwell cores, a shared L3-cache, a dual 10 gigabit MAC and a PCIe 3.0 root with 24 lanes find a home in the integrated SoC, whereas four USB 3.0, four USB 2.0, six SATA3 controllers and a PCIe 2.0 root are integrated in a PCH chip in the same package.

The Broadwell architecture brings small microarchitectural improvements - Intel currently claims about 5.5% higher IPC in integer processing. Other improvements include slightly lower VM exit/enter latencies, something that Intel has been improving with almost every recent generation (excluding Sandy Bridge).

Of course, if you are in the server business, you care little about all the small IPC improvements. Let us focus on the large relevant improvements. The big improvements over the Xeon E3-1200 v3 are:

- Twice as many cores and threads (8/16 vs 4/8)

- 32 GB instead of 8 GB per DIMM supported and support for DDR4-2133

- Maximum memory capacity has quadrupled (128 GB vs 32 GB)

- 24 PCIe 3.0 lanes instead of 16 PCIe 3.0 lanes

- 12 MB L3 rather than 8 MB L3

- No separate C22x chipset necessary for SATA / USB

- Dual 10 Gbit Ethernet integrated ...

And last but not least, RAS (Reliability, Availability and Serviceability) features which are more similar to the Xeon E5:

The only RAS features missing in the Xeon D are the expensive ones like memory mirroring. Those RAS features are very rarely used, and the Xeon D cannot offer them as it does not have a second memory controller.

Compared to the Atom C2000, the biggest improvement is the fact that the Broadwell core is vastly more advanced than the Silvermont core. That is not all:

- Atom C2000 had no L3-cache, and is thus a lot slower in situations where the cores have to sync a lot (databases)

- No support for USB 3 (Xeon D: four USB 3 controllers)

- As far as we know, Atom C2000 server boards were limited to two 1 Gbit PHYs (unless you add a separate 10 GbE controller)

- No support for PCIe 3.0, "only" 16 PCIe Gen2 lanes.

There are more subtle differences of course such as using a crossbar rather than a ring, but those are beyond the scope of this review.

Supermicro's SuperServer 5028D-TN4T

Supermicro has always been one of the first server vendors that integrates new Intel technology. The Supermicro SYS-5028D-TN4T is a mini-tower, clearly targeted at Small Businesses that still want to keep their server close instead of in the cloud, which is still a strategy that makes sense in quite a few situations.

The system features four 3.5 inch hot swappable drive bays, which makes it easy to service the component that fails the most in a server system: the magnetic disks.

That being said, we feel that the system falls a bit short with regard to serviceability. For example, replacing DIMMs or adding an SSD (in one of the fixed 2.5 inch bays) requires you to remove some screws and to apply quite a bit of force to remove the cover of the chassis.

Tinkering with DIMMs under the storage bays is also a somewhat time consuming experience. You can slide out the motherboard, but that requires removing almost all cabling. Granted, most system administrators will rarely replace SSDs or DIMMs. But the second most failing component is the PSU, which is not easily swappable either, as it is attached to the chassis with screws.

On the positive side, an AST2400 BMC is present and allows you to administer the system remotely via a dedicated Ethernet interface. Supermicro also added an Intel i350 dual gigabit LAN controller. So you have ample networking resources: one remote control ethernet port, two gigabit and two 10 gigabit (10GBase-T) ports, courtesy of the Xeon-D integrated 10 GbE Ethernet MAC.

Inside the 5028D-TN4T

Inside the 5028D-TN4T, we find the mini-ITX X10SDV-TLN4F motherboard.

The logical layout of the board means that you need very few additional building blocks to make a server system with the Xeon D.

The X10SDV-TLN4F consists of the Xeon D-1540, the AST2400 BMC, a few PCIe switches and the Intel i350 gigabit chip. The AST2400 is a pretty potent BMC which can send 1920x1200 compressed pixels over a 1 Gbit Ethernet PHY. Its 400 MHz ARM926EJ embedded CPU is connected via a 16 bit interface to a 1 Gbit, 667 MHz Winbond Electronics W631GG6KB-15 DDR3-SDRAM. Impressive specs for a BMC.

Benchmark Configuration

All tests were done on Ubuntu Server 14.04 LTS (soon to be upgraded to 15.04). Aside from the SuperMicro Xeon-D system, we also have the ASRock Rack C2750D4I (eight core Silvermont), a Xeon E3-1200 v3 system, a Xeon E3-1200 v2 system, a 1P Xeon E5-2600L v3 and an HP Moonshot cartridge based system. We tested the HP Moonshot cartridges remotely.

Supermicro's 5028D-TN4T

| CPU | Xeon D-1540 2.0 GHz |

| RAM | 4x16GB DDR4-2133 |

| Internal Disks | Samsung 850 Pro 128 GB |

| Motherboard | Supermicro X10SDV-TLN4F |

| PSU | FSP250-50LC (250 W, 80+ Bronze) |

Below you can find most of the CPU settings in the BIOS:

ASRock's C2750D4I

| CPU | Intel Atom C2750 2.4 GHz |

| RAM | 4x8GB DDR3-1600 |

| Internal Disks | Samsung 850 Pro 128 GB |

| Motherboard | ASRock C2750D4I |

| PSU | Supermicro PWS-502 (80+) |

The Xeon D is not a replacement for the Atom C2000. Although the Xeon D is also a SoC, the Atom C2000 remains Intel's low power option for microservers. Of course, we want to know how much power you save, and how large the performance trade-off is.

Intel's Xeon E3-1200 v3 – ASUS P9D-MH

| CPU | Intel Xeon processor E3-1240 v3 3.4 GHz / Intel Xeon processor E3-1230L v3 1.8 GHz |

| RAM | 4x8GB DDR3-1600 |

| Internal Disks | 1x Samsung 850 Pro 128 GB |

| Motherboard | ASUS P9D-MH |

| PSU | Supermicro PWS-502 (80+) |

As the Xeon D is limited to 2 GHz (2.6 GHz turbo boost), higher clocked Xeon E3s might still make sense where single threaded performance is a major concern. The Xeon E3-1230L was included as a low power alternative, although we wonder whether it still makes sense, considering that the Xeon E3 needs a separate 1-4W chipset (C220).

Intel's Xeon E3-1200 v2

| CPU | Intel Xeon processor E3-1265L v2 |

| RAM | 4x8GB DDR3-1600 |

| Internal Disks | 1x Intel MLC SSD710 200GB |

| Motherboard | Intel S1200BTL |

| PSU | Supermicro PWS-502 (80+) |

The previous generation low power Xeon E3.

Intel's Xeon E5 Server – "Wildcat Pass" (2U Chassis)

| CPU | One Intel Xeon processor E5-2650L v3 (1.8GHz, 12c, 30MB L3, 65W) |

| RAM | 128GB (8x16GB) Samsung M393A2G40DB0 (RDIMM) |

| Internal Disks | 2x Intel MLC SSD710 200GB |

| Motherboard | Intel Server Board Wildcat Pass |

| PSU | Delta Electronics 750W DPS-750XB A (80+ Platinum) |

Although our E5 server is not comparable to the other systems, it is important to gauge where a low power E5 model would land. We like to understand when it makes sense to invest more money in a Xeon E5 system, and here we only use one Xeon. Note that this system also requires power for a separate PCH.

HP Moonshot

More info about this configuration can be found in our previous article about micro server SoCs.

We tested two different cartridges: the m400 and the m300. Below you can find the specs of the m400:

| CPU/SoC | AppliedMicro X-Gene 2.4 |

| RAM | 8x 8GB DDR3 @ 1600 |

| Internal Disks | M.2 2280 Solid State 120GB |

| Cartridge | m400 |

And the m300:

| CPU/SoC | Atom C2750 2.4 |

| RAM | 8x 8GB DDR3 @ 1600 |

| Internal Disks | M.2 2280 Solid State 120GB |

| Cartridge | m300 |

Other Notes

Both servers are fed by a standard European 230V (16 Amps max.) power line. The room temperature is monitored and kept at 23°C by our Airwell CRACs. We use the Racktivity ES1008 Energy Switch PDU to measure power consumption in our lab. We used the HP Moonshot ILO to measure the power consumption of the cartridges.

Memory Subsystem: Bandwidth

As more memory channels complicate motherboard design and can be a problem for dense servers, the Xeon-D, Xeon E3 and Atom C2000 only have two memory channels. This makes quad channel operation a good way to differentiate the Xeon E5 from the lower-end chips. The Xeon E3 and Atom are limited to DDR3-1600 as per JEDEC specifications, whereas the Xeon D should be able to command more bandwidth due to the use of DDR4-2133 DIMMs.

We measured the memory bandwidth in Linux. The binary was compiled with the Open64 compiler 5.0 (Opencc). It is a multi-threaded, OpenMP based, 64-bit binary. The following compiler switches were used:

-Ofast -mp -ipa

To keep things simple, we only report the Triad sub-benchmark of our OpenMP enabled Stream benchmark.
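For readers unfamiliar with Stream, the Triad kernel is simply `a[i] = b[i] + scalar * c[i]` over large arrays, and the reported bandwidth counts two reads and one write of 8-byte doubles per element. The sketch below is a pure Python illustration of that accounting, not our compiled OpenMP binary; the names `triad` and `triad_bandwidth_gbs` are our own, and an interpreter will of course come nowhere near the numbers a real Stream run produces.

```python
from array import array


def triad(a, b, c, scalar):
    """STREAM Triad kernel: a[i] = b[i] + scalar * c[i]."""
    for i in range(len(a)):
        a[i] = b[i] + scalar * c[i]


def triad_bandwidth_gbs(n, seconds):
    """Bandwidth as STREAM counts it for Triad: 3 arrays x 8 bytes per element."""
    return 3 * 8 * n / seconds / 1e9


# Tiny demonstration arrays (a real run uses arrays far larger than the L3 cache).
n = 8
a = array("d", [0.0]) * n
b = array("d", [1.0]) * n
c = array("d", [2.0]) * n
triad(a, b, c, 3.0)
```

Timing the `triad` call with `time.perf_counter()` and feeding the elapsed seconds into `triad_bandwidth_gbs` gives the figure that Stream reports as "Triad".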

Using DIMMs with a 33% higher clock, the Xeon D gets a 25-38% boost in bandwidth compared to the Xeon E3. Basically, every percent increase in clock speed is translated into higher bandwidth. The Xeon E5 has almost twice as much bandwidth for only 50% more cores and should as a result do better in some bandwidth intensive applications (mostly HPC).
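As a sanity check on these ratios, the theoretical peak bandwidth follows directly from the transfer rate, the 64-bit (8 byte) channel width and the number of channels. The sketch below is only that back-of-the-envelope calculation; the function name is ours, and the measured Triad numbers will always land below these peaks.

```python
def peak_bandwidth_gbs(mega_transfers_per_s, channels, bus_bytes=8):
    """Theoretical peak DRAM bandwidth in GB/s for 64-bit wide channels."""
    return mega_transfers_per_s * 1e6 * bus_bytes * channels / 1e9


xeon_e3 = peak_bandwidth_gbs(1600, channels=2)  # dual channel DDR3-1600
xeon_d = peak_bandwidth_gbs(2133, channels=2)   # dual channel DDR4-2133
xeon_e5 = peak_bandwidth_gbs(2133, channels=4)  # quad channel DDR4-2133

print(f"Xeon D vs Xeon E3: {xeon_d / xeon_e3:.2f}x")
print(f"Xeon E5 vs Xeon D: {xeon_e5 / xeon_d:.2f}x")
```

On paper the Xeon D has a third more bandwidth than the Xeon E3, and the quad channel Xeon E5 has twice that of the Xeon D, which is in line with the measured gaps.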

Memory Subsystem: Latency

To measure latency, we use the open source TinyMemBench benchmark. The source was compiled for x86 with gcc 4.8.2 and optimization was set to "-O2". The measurement is described well by the manual of TinyMemBench:

Average time is measured for random memory accesses in the buffers of different sizes. The larger the buffer, the more significant the relative contributions of TLB, L1/L2 cache misses, and DRAM accesses become. All the numbers represent extra time, which needs to be added to L1 cache latency (4 cycles).

We tested with dual random read, as we wanted to see how the memory system coped with multiple read requests. To keep the graph readable we limited ourselves to the CPUs that were different.

L3 caches have grown significantly over the past years, but it is not all good news. The L3 cache of the Xeon E3 responds very quickly (about 10 ns or less than 30 cycles at 2.8 GHz) while the L3-cache of the new generation needs almost twice as much time to respond (about 20 ns or 50 cycles at 2.6 GHz). Larger L3 caches are not always a blessing and can result in a hit to latency - there are applications that have a relatively small part of cacheable data/instructions, such as search engines and HPC applications that work on huge amounts of data.
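The cycle counts quoted above are just the measured latency multiplied by the clock speed. A minimal sketch of that conversion, with a helper name of our own choosing:

```python
def ns_to_cycles(latency_ns, clock_ghz):
    """Convert a latency in nanoseconds to core clock cycles."""
    return latency_ns * clock_ghz


xeon_e3_l3 = ns_to_cycles(10, 2.8)   # Xeon E3 L3 at its 2.8 GHz turbo clock
new_gen_l3 = ns_to_cycles(20, 2.6)   # newer, larger L3 at 2.6 GHz

print(f"Xeon E3 L3: about {xeon_e3_l3:.0f} cycles")
print(f"Large L3:   about {new_gen_l3:.0f} cycles")
```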

It gets worse for the "large L3 cache" models when we look at latency of accessing memory (measured at 64 MB):

The higher L3-cache latency makes memory accesses more costly in terms of latency for the Xeon E5. Despite having access to DDR4-2133 DIMMs, the Xeon E5-2650L accesses memory slower than the Xeon E3-1230L. It is also a major weakness of the Atom C2750, which has a much less sophisticated memory controller and prefetching.

Single-Threaded Integer Performance

The LZMA compression benchmark only measures a part of the performance of some real-world server applications (file server, backup, etc.). The reason why we keep using this benchmark is that it allows us to isolate the "hard to extract instruction level parallelism (ILP)" and "sensitive to memory parallelism and latency" integer performance. That is the kind of integer performance you need in most server applications.

One more reason to test performance in this manner is that the 7-zip source code is available under the GNU LGPL license. That allows us to recompile the source code on every machine with the -O2 optimization with gcc 4.8.2.

The Xeon E5-2650L Haswell core is only able to boost to 2.5 GHz, while the Xeon D has a newer core (Broadwell) and is capable of 2.6 GHz. Still, the Xeon E5 is 6% faster. The most likely explanation is that the Xeon E5-2650L (65W TDP) keeps turboboost higher for a longer time than the Xeon D (45W TDP).

The Xeon D and Atom C2750 run at the same clockspeed in this single threaded task (2.6 GHz), but you can see how much difference a wide complex architecture makes. The Broadwell Core is able to run about twice as many instructions in parallel as the Silvermont core. The Haswell/Broadwell core results clearly show that well designed wide architectures remain quite capable, even in "low ILP" (Instruction Level Parallelism) code.

Let's see how the chips compare in decompression. Decompression is an even lower IPC (Instructions Per Clock) workload, as it is pretty branch intensive and depends on the latencies of the multiply and shift instructions.

The Xeon E5 runs at 2.5 GHz, the Xeon D at 2.6 GHz and the Xeon E3-1230L at 2.8 GHz, while the Xeon E3-1265L can reach 3.7 GHz. The decompression results follow the same logic. There does not seem to be a difference between a Broadwell, Haswell or Ivy Bridge core: performance is almost linear with (turbo boost) clock speed. The only exception is the Xeon E3-1240, which turbo boosts to 3.8 GHz but outperforms the others by a larger margin than expected. The explanation is pretty simple: the higher TDP (80 W) allows the chip to sustain turbo boost clock speeds for much longer.

Multi-Threaded Integer Performance

Next we run the same workload in several active instances to see how well the different CPUs scale. The Xeon E5 and Xeon D should finally be able to show off their higher core counts.

The Xeon D scales well: performance is multiplied by 8. It is interesting to note that it delivers 82% of the raw integer processing power of the low power Xeon E5, which has 50% more cores and a 44% higher TDP (65W).
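To put those percentages side by side, the sketch below normalizes the throughput per core and per watt of TDP. It only uses the figures quoted above; the dictionary layout and function name are our own, and TDP is a design limit rather than measured power, so treat the per-watt figure as a rough indication.

```python
# Throughput is relative to the Xeon E5-2650L v3 (= 1.00), per the text above.
XEON_D = {"cores": 8, "tdp_w": 45, "relative_throughput": 0.82}
XEON_E5 = {"cores": 12, "tdp_w": 65, "relative_throughput": 1.00}


def per_unit_advantage(a, b, key):
    """Ratio of (throughput per unit of `key`) for chip a versus chip b."""
    return (a["relative_throughput"] / a[key]) / (b["relative_throughput"] / b[key])


core_gap = XEON_E5["cores"] / XEON_D["cores"] - 1   # extra cores of the E5
tdp_gap = XEON_E5["tdp_w"] / XEON_D["tdp_w"] - 1    # extra TDP of the E5

print(f"E5 has {core_gap:.0%} more cores and {tdp_gap:.0%} higher TDP")
print(f"Xeon D per core:        {per_unit_advantage(XEON_D, XEON_E5, 'cores'):.2f}x")
print(f"Xeon D per watt of TDP: {per_unit_advantage(XEON_D, XEON_E5, 'tdp_w'):.2f}x")
```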

We did not test the Xeon E3-1265L v3 (45 W), but the 3.1 GHz chip will end up between the E3-1265L v2 and E3-1240 v3 (3.4 GHz); it will probably score something like 19000. The Xeon D thus delivers no less than 50% more raw processing power at the same TDP, while integrating more functionality. This should further drive down the total power a server uses. This really shows what an excellent improvement the Xeon D is if you can use the 16 threads.

The decompression benchmark tells us the same story as the compression test: the Xeon D delivers.

Compiling with gcc

A more real world benchmark to test the integer processing power of our Xeon servers is a Linux kernel compile. Although few people compile their own kernel, compiling other software on servers is a common task and this will give us a good idea of how the CPUs handle a complex build.

To do this we have downloaded the 3.11 kernel from kernel.org. We then compiled the kernel with the "time make -jx" command, where x is the maximum number of threads that the platform is capable of using. To make the graph more readable, the number of seconds in wall time was converted into the number of builds per hour.
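The conversion from wall time to builds per hour is straightforward. The sketch below shows it together with a hypothetical timing wrapper; `time_kernel_build` and its `source_dir` argument are illustrative names of ours, not part of our actual test scripts, and it assumes `make` is on the path and the kernel tree is already configured.

```python
import os
import subprocess
import time


def builds_per_hour(wall_seconds):
    """Convert the wall time of one build into builds per hour."""
    return 3600.0 / wall_seconds


def time_kernel_build(source_dir, threads=None):
    """Run `make -jN` in source_dir and return the elapsed wall time in seconds.

    Hypothetical helper: defaults N to the number of hardware threads.
    """
    threads = threads or os.cpu_count()
    start = time.perf_counter()
    subprocess.run(["make", f"-j{threads}"], cwd=source_dir, check=True)
    return time.perf_counter() - start
```

A build that takes two minutes of wall time, for example, corresponds to `builds_per_hour(120)`, or 30 builds per hour.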

The Xeon D delivers at least 65% better performance than the Xeon E3s. Considering the low TDP, that is pretty amazing. The Xeon E5 delivers 30% more with 50% more cores - as the Xeon E5 is twice as expensive, the Xeon D holds a massive performance per dollar advantage. The brawny Broadwell cores of the Xeon D compile no less than 3.7 times faster than the small Silvermont cores of the Atom, meaning that compiling definitely favors the more sophisticated cores.

If you regularly compile large projects, the Xeon D is one of the best choices you have, even compared to highly clocked Core i7 solutions. The cheaper quad core i7s will perform like the Xeon E3-1240; the equally priced ($583) i7-5930K will do about 50% better, still below the Xeon D. The Xeon D offers you integrated dual 10 Gb Ethernet, SATA and USB, which should offer lower latency. The Xeon D can also support twice as much memory (128 GB vs 64 GB) and offer you a much lower power bill (45W vs 140W TDP), making hardware decisions around compilation based projects an easy choice to make.

OpenFoam

Computational Fluid Dynamics is a very important part of the HPC world. Several readers told us that we should look into OpenFoam and calculating aerodynamics that involves the use of CFD software.

We use a real-world test case as benchmark. All tests were done on OpenFoam 2.2.1 and openmpi-1.6.3.

We also found AVX code inside OpenFoam 2.2.1, so we assume that this is one of the cases where AVX improves FP performance.

HPC code is where the Xeon E5 makes a lot more sense than the cheaper Xeons. The Xeon E5 is no less than 80% faster with 50% more cores than the Xeon D. In this case, the Xeon D does not make the previous Xeon E3s look ridiculous: the Xeon D runs the job about 33% faster. Let us zoom in.

OpenFoam scales much better on the Xeon E5, and we've seen previously that a second CPU boosts performance by 90%, offering near linear scalability. Double the number of cores again and you get another very respectable 60%. Eight cores are 34% faster than four, and 4.1 times faster than one.

Compare this to the horrible scaling of the Xeon E3 v2: 4 cores are slower than one. The Xeon E3 v3 fixed that somewhat, and doubles the performance over the same range. The eight cores of the Xeon D are about 2.8 times faster than one - that is decent scaling but nowhere near the Xeon E5. There are several reasons for this, but the most obvious one is that the Xeon E5 really benefits from the fact that it has almost twice the amount of bandwidth available. To be fair, Intel does not list HPC as a target market for the Xeon D. If the improved AVX2 capabilities and the pricing might have tempted you to use the Xeon D in your next workstation/HPC server, know that the Xeon D cannot always deliver the full potential of the 8 Broadwell cores, despite having access to DDR4-2133.
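One way to compare these scaling numbers is parallel efficiency: the speedup over one core divided by the number of cores used. The sketch below applies that to the speedups quoted above; the labels and function name are ours.

```python
def parallel_efficiency(speedup, cores):
    """Fraction of ideal linear scaling achieved (1.0 means perfect scaling)."""
    return speedup / cores


# (speedup over one core, cores used), taken from the text above.
observed = {
    "Xeon E5, 8 cores": (4.1, 8),
    "Xeon D, 8 cores": (2.8, 8),
    "Xeon E3 v3, 4 cores": (2.0, 4),
}

for name, (speedup, cores) in observed.items():
    print(f"{name}: {parallel_efficiency(speedup, cores):.0%} of linear")
```

At eight cores the Xeon E5 still runs at roughly half of linear scaling, while the Xeon D drops to about a third, which is consistent with the bandwidth explanation.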

Java Server Performance

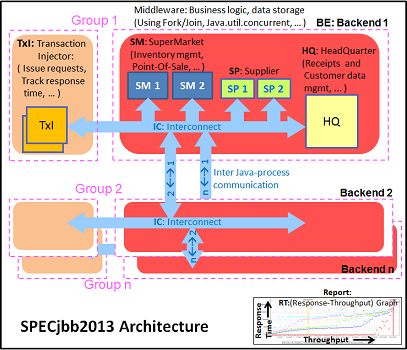

The SPECjbb 2013 benchmark has "a usage model based on a world-wide supermarket company with an IT infrastructure that handles a mix of point-of-sale requests, online purchases, and data-mining operations." It uses the latest Java 7 features and makes use of XML, compressed communication, and messaging with security.

We tested with four groups of transaction injectors and back-ends. We applied relatively basic tuning to mimic real-world use. We used this JVM configuration setting for the systems limited to 32 GB (all Xeon E3):

"-server -Xmx4G -Xms4G -Xmn2G -XX:+AlwaysPreTouch -XX:+UseLargePages"

With these settings, the benchmark takes about 20-27GB of RAM. For the servers that could address 64 GB or more (Atom, Xeon D and Xeon E5), we used a slightly beefier setting:

"-server -Xmx8G -Xms8G -Xmn4G -XX:+AlwaysPreTouch -XX:+UseLargePages"

With these settings, the benchmark takes about 43-57GB of RAM. The first metric is basically maximum throughput.

As long as you run enough JVMs on top of your server, the Xeon D and Xeon E5 will not disappoint. The Xeon D is at least 37% faster than the previous Xeon E3 generation; the Xeon E5 delivers 50% more.

The Critical-jOPS metric is a throughput metric under response time constraint.

The Xeon D seems to be slightly hindered by the lack of memory bandwidth in the max throughput benchmark, but less than in our HPC benchmark. It is important to understand that maximum throughput is very important in an HPC benchmark, but for a Java based back-end server, the critical benchmark matters much more than the maximum one. The reason is simple: the critical benchmark tells you what your customers will experience on a daily basis, while the maximum throughput benchmark describes what you will get in the worst case scenario when your server is pushed to its limits.

In the critical benchmark, the Xeon D is at least 65% faster than any Xeon E3. The Broadwell core is a minor improvement over the Haswell core when you look at performance only (single threaded integer performance), but once it is integrated in a chip like the Xeon D, it is astonishing how much performance per watt you get. A 60-70% increase in performance per watt is a rare thing indeed.

SPECJBB®2013 is a registered trademark of the Standard Performance Evaluation Corporation (SPEC).

Web Server Performance

Websites based on the LAMP stack - Linux, Apache, MySQL, and PHP - are very popular. Few people write html/PHP code from scratch these days, so we turned to a Drupal 7.21 based site. The web server is Apache 2.4.7 and the database is MySQL 5.5.38 on top of Ubuntu 14.04 LTS.

Drupal powers massive sites (e.g. The Economist and MTV Europe) and has a reputation of being a hardware resource hog. That is a price more and more developers happily pay for lowering the time to market of their work. We tested the Drupal website with our vApus stress testing framework and increased the number of connections from 5 to 300.

We report the maximum throughput achievable with 95% of requests being handled faster than 1000 ms.
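To make that metric concrete, the sketch below picks the highest throughput among a set of load levels whose 95th percentile response time stays under the limit. This is an illustration of the idea using a nearest-rank percentile, not the vApus implementation; the function names and the data layout are assumptions of ours.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of a list of response times."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def max_throughput_within_limit(runs, limit_ms=1000, pct=95):
    """runs: list of (throughput in req/s, [response times in ms]) per load level.

    Returns the highest throughput whose pct-th percentile is below limit_ms,
    or None if no load level qualifies.
    """
    passing = [tput for tput, times in runs if percentile(times, pct) < limit_ms]
    return max(passing) if passing else None


# Made-up sample data, purely to show the selection logic.
runs = [
    (100, [50] * 100),
    (150, [50] * 95 + [1500] * 5),   # exactly 95% of requests are fast
    (200, [50] * 94 + [1500] * 6),   # only 94% are fast: disqualified
]
best = max_throughput_within_limit(runs)
```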

Let us be honest: the graph above is not telling you everything. The truth is that, on the Xeon D and Xeon E5, we ran into several other bottlenecks (OS and Database related) before we ever could measure a 1000 ms 95th percentile response time. So the actual throughput at 1 second response time is higher.

Basically, the performance of the Xeon D and Xeon E5 was too high for our current benchmark setup. Let us zoom in a bit to get a more accurate picture. The picture below shows you the 95th percentile of the response time (Y-axis) versus the amount of concurrent requests/users (X-axis). We did not show the results of the Atom C2750 beyond 200 req/s to keep the graph readable.

We warm up the machine with 5 concurrent requests, but that is not enough for some servers. Notice that the response time of the Xeon D between 50 and 200 requests per second is lower than at 25 requests per second. So let us start our analysis at 50 requests per second.

The Xeon E3-1230L clock speed fluctuates between 1.8, 2.3 and 2.8 GHz. It is an amazing low power chip, but you pay a price: the 95th percentile never goes below 100 ms. The highly clocked Xeon E3s like the 1240 keep the response time below 100 ms unless your website is hit more than 100 times per second.

The Xeon D once again delivers astonishing performance. Unless the load is more than 200 concurrent requests per second, the server responds within 100 ms. There is more. Imagine that you want to keep your 95th percentile response time below half a second. With a previous generation Xeon E3, even the 80W chip will hit that limit at around 200-250 requests per second. The Xeon D sustains about 800 (!) requests per second (not shown on graph) before a small percentage of the users will experience that response time. In other words, you can sustain up to 4 times as many hits with the Xeon D-1540 compared to the E3.

Scale-Out Big Data Benchmark: ElasticSearch

ElasticSearch is an open source, full text search engine that can be run on a cluster relatively easily. It's basically like an open source version of Google Search that can be deployed in an enterprise. It should be one of the poster children of scale-out software and is one of the representatives of the so called "Big Data" technologies. Thanks to Kirth Lammens, one of the talented researchers at my lab, we have developed a benchmark that searches through all the Wikipedia content (+/- 40GB). Elasticsearch is – like many Big Data technologies – built on Java (we use the 64-bit server version 1.7.0).

The term "Big data" almost immediately suggests that you need massive machines, more like the new Xeon E7 which supports up to 6 TB. In reality, many big data analyses are running on top of very humble machines in a cluster. ElasticSearch is such an application: the underlying Java technology does not work well with a heap larger than 32 GB. A total of 64 GB RAM is considered the sweet spot, as it leaves some RAM for filesystem caching.

The result of the Xeon D is stunning. The Xeon D is no less than 70% faster than the fastest Xeon E3s. Better performance is possible with the Xeon E5, but the price tag of those servers is not comparable to the Xeon D servers. The Xeon D-1540 (and as a result the SYS-5028D-TN4T) is the performance per dollar champ here.

Saving Power

Efficiency is very important in many scenarios, so let's start by checking out idle power consumption. Most of our servers have a different form factor. Some (X-Gene, Atom C2750 in HP Moonshot) are micro-servers sharing a common PSU and some are based upon a motherboard that has a lot of storage interfaces (the C2750 server, the SYS-5028D-TN4T). Our Xeon E5 was running inside a heavy 2U rack server, so we did not include those power readings.

But with some smart measurements, some deductions and a large grain of salt we can get somewhere. We ask our readers to take some time to analyze the measurements below.

(*) Calculated as if the Xeon E3 was run in an "m300-ish" board.

(**) See our comments

The server based upon the ASUS P9D-MH is the most feature rich board in our comparison. The C2750 measurements show how much difference a certain board can make - the ASRock C2750D4I which targets the storage market needs 31 W, and by comparison the m300 micro server inside the HP Moonshot needs only 11 W. The last measurement does not include the losses of the PSUs, but it still shows how much difference, even in idle, the board makes.

The board inside the Supermicro SYS-5028D-TN4T - the Supermicro X10SDV-TLN4F - is a bit more compact than the ASUS P9D-MH, and is very similar to the ASRock C2750D4I. But inside the SYS-5028D-TN4T we also find a storage backplane and a large fan in the back. So we disabled several components to find out what their impact was.

- 0.5 Watt for the large fan in the back of chassis

- 0.5 Watt for the fan on top of the heatsink

- 0.5 Watt for the storage backplane

- 3.5 Watt for 10 Gbit Ethernet PHY

In order to make the Supermicro similar to the C2750D4I, we disabled the large fan, removed the storage backplane and disabled the 10 Gb Ethernet PHY in the BIOS. The result was that the idle power dropped from 31W to 27W. The only remaining difference was that the ASRock C2750D4I uses a large passive heatsink and the Supermicro X10SDV-TLN4F uses a small fan. We found out that the fan uses about 0.5 Watt, so we have reason to believe that the Xeon D consumes slightly less than, or a similar amount of power to, the Atom C2750 at idle.
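The bookkeeping behind that comparison can be sketched as follows. The per-component values are the ones listed above; the dictionary keys and function name are our own, and the small gap between the summed components and the measured drop is the kind of rounding you should expect at this resolution.

```python
# Idle power attributed to each component, in watts, as listed above.
COMPONENT_IDLE_W = {
    "rear chassis fan": 0.5,
    "heatsink fan": 0.5,
    "storage backplane": 0.5,
    "10 Gbit Ethernet PHY": 3.5,
}


def idle_savings(disabled):
    """Sum the idle power of the components that were disabled or removed."""
    return sum(COMPONENT_IDLE_W[name] for name in disabled)


disabled = ["rear chassis fan", "storage backplane", "10 Gbit Ethernet PHY"]
expected_drop = idle_savings(disabled)   # sum of the individual measurements
measured_drop = 31 - 27                  # system idle power before and after

# Subtracting the small heatsink fan approximates a passively cooled board.
fanless_estimate = 27 - COMPONENT_IDLE_W["heatsink fan"]

print(f"expected {expected_drop} W, measured {measured_drop} W")
print(f"estimated idle without heatsink fan: {fanless_estimate} W")
```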

For those who have missed our review of the X-Gene 1, remember that the software ecosystem for ARM is not ready yet (ACPI and PCIe support) and that the Ubuntu running on top of the X-Gene was not the vanilla Ubuntu 14.04 but a customized/patched one. Also the X-Gene 1 is baked with an older 40 nm process.

Web Infrastructure Power consumption

Next we tested the system under load.

(*) measured/calculated to mimic a Xeon-E3 "m300-ish" board.

Let us untangle the results by separating the power that goes to the SoC and the power that goes to the system. We did a similar thought experiment in our X-Gene 1, Atom C2000 and Xeon E3 comparison.

Power Consumption SoC Calculations

| SoC | Power Delta = Power Web - Idle (W) | Power SoC = Power Delta + Idle SoC + Chipset (W) |
|---|---|---|
| Xeon E3-1240 v3 3.4 | 95-42 = 53 | 53+3+3 = 59 |
| Xeon E3-1230L v3 1.8 | 68-41 = 27 (45-18 = 27) | 27+3+3 = 33 |
| Xeon D-1540 | 73-31 = 42 | 42+2+0 = 44 |
| Atom C2750 2.4 | 25-11 = 13 | 13+3+0 = 16 |
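The two formulas in the table header can be written out as a small helper. The sketch below feeds it the rounded readings printed in the table; the function and dictionary names are ours. Note that with these rounded inputs the Atom C2750 works out to 14 W and 17 W rather than the 13 W and 16 W listed, presumably because the table was computed from unrounded readings.

```python
def soc_power(load_w, idle_w, idle_soc_w, chipset_w):
    """Return (power delta, estimated SoC power) in watts.

    delta = power under web load - idle power
    SoC   = delta + idle SoC power + chipset power
    """
    delta = load_w - idle_w
    return delta, delta + idle_soc_w + chipset_w


# (web load, idle, idle SoC, chipset) in watts, as printed in the table.
systems = {
    "Xeon E3-1240 v3 3.4": (95, 42, 3, 3),
    "Xeon E3-1230L 1.8": (68, 41, 3, 3),
    "Xeon D-1540": (73, 31, 2, 0),
    "Atom C2750 2.4": (25, 11, 3, 0),
}

for name, readings in systems.items():
    delta, soc = soc_power(*readings)
    print(f"{name}: delta {delta} W, SoC {soc} W")
```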

Now let us combine our calculated SoC power consumption and the power measurements in the graph above. The Atom C2750 still makes sense in a micro server if CPU performance is not a priority: think static webservers and caching servers. You can fit an Atom C2750 server inside a power envelope of 25W as HP has proven. Based upon our own experience, such a Xeon D system would probably require more like 55 - 60 W.

If CPU performance is somewhat important, the Xeon D is the absolute champion. A Xeon E3-1230L server with similar features (2x 10 Gb for example) will probably consume almost the same amount of power as we have witnessed on our ASUS P9D board (68 W). Given a decently scaling application with enough threads or some kind of virtualization (KVM/Hyper-V/Docker), the Xeon D server will thus consume at most about 1/3 more than a Xeon E3-1230L, but deliver almost twice as much performance.
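That trade-off can be expressed as a performance per watt ratio. The sketch below uses the calculated SoC power figures (44 W and 33 W) and takes "almost twice" as an upper bound of 2.0, which is an assumption on our part; the function name is ours as well.

```python
def perf_per_watt_gain(perf_ratio, power_a_w, power_b_w):
    """Performance per watt of system A relative to system B.

    perf_ratio is performance of A divided by performance of B.
    """
    return perf_ratio / (power_a_w / power_b_w)


extra_power = 44 / 33 - 1                   # Xeon D vs Xeon E3-1230L SoC power
gain = perf_per_watt_gain(2.0, 44, 33)      # upper bound: twice the performance

print(f"Xeon D uses about {extra_power:.0%} more power")
print(f"Performance per watt: up to {gain:.2f}x the Xeon E3-1230L")
```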

Conclusion: the Xeon D-1540 is awesome

If you only look at the integer performance of a single Broadwell core, the improvement over the Haswell based core is close to boring. But the fact that Intel was able to combine 8 of them together with dual 10 Gbit, 4 USB 3.0 controllers, 6 SATA 3 controllers and quite a bit more inside a SoC that needs less than 45 W makes it an amazing product. In fact we have not seen such massive improvements from one Intel generation to another since the launch of the Xeon 5500. The performance per watt of a Xeon D-1540 is 50% better than the Haswell based E3 (Xeon E3-1230L).

Most of the design wins of the Xeon D are network and storage devices and, to a lesser degree, micro servers. Intel also positions the Xeon D machines at the Datacenter/Network edge, even as an IoT gateway.

Now, granted, market positioning slides are all about short powerful messages and leave little room for nuance. But since we have room for lengthier commentaries, our job is to talk about nuances. So we feel the Xeon D can do a lot more. It can be a mid range Java server, text search engine or high-end development machine. It can be a node inside a web server cluster that takes heavy traffic.

In fact the Xeon D-1540 ($581) makes the low end of the Xeon E5 SKUs such as the E5-2630 (6 cores at 2.3 GHz, 95 W, $612) look pretty bad for a lot of workloads. Why would you pay more for such an E5 server that consumes a lot more? The answer is some HPC applications, as our results show. The only advantage such a low end dual socket E5 server has is memory capacity and the fact that you can use two of them (up to 12 cores).

So as long as you do not make the mistake of using it for memory intensive HPC applications (note that most HPC apps are memory intensive) and 8 cores is enough for you, the Xeon D is probably the most awesome product Intel has delivered in years, even if it is slightly hidden away from the mainstream.

Where does this leave the ARM server plans?

The Xeon D effectively puts a big, almost unbreakable lock on some parts of the server market in the short and mid term (as Intel will undoubtedly further improve the Xeon D line). It is hard to see how anyone can offer a server SoC in the short term that can beat its sky high performance per watt ratio when performing dynamic web serving, for example.

However, the pricing and power envelope (about 60W in total for a "micro" server) of the Xeon D still leave quite a bit of room in markets where density and pricing are everything. You do not need Xeon D power to run a caching or static web server, as an Atom C2000-level of performance and a lot of DRAM slots will do. There are some chances here, but we would really like to see some real products instead of yet another slide deck with great promises. Frankly, we don't think that the standard ARM designs will do. The A57 is probably not strong enough for the "non-micro server" market and it remains to be seen if the A72 will be a large enough improvement. More specialized designs such as Cavium Thunder-X, Qualcomm's Kryo or Broadcom Vulcan might still capture a niche market in the foreseeable future.